I’ve been thinking back to a fantastic thesis (Davidson A. – Opponent Modeling in Poker: Learning and Acting in a Hostile and Uncertain Environment) that I discovered years ago. Back then, I was immersed in coding projects, and each new insight felt like a mini-revelation. Here are some of the highlights as I remember them, seen through a rather blurry lens.

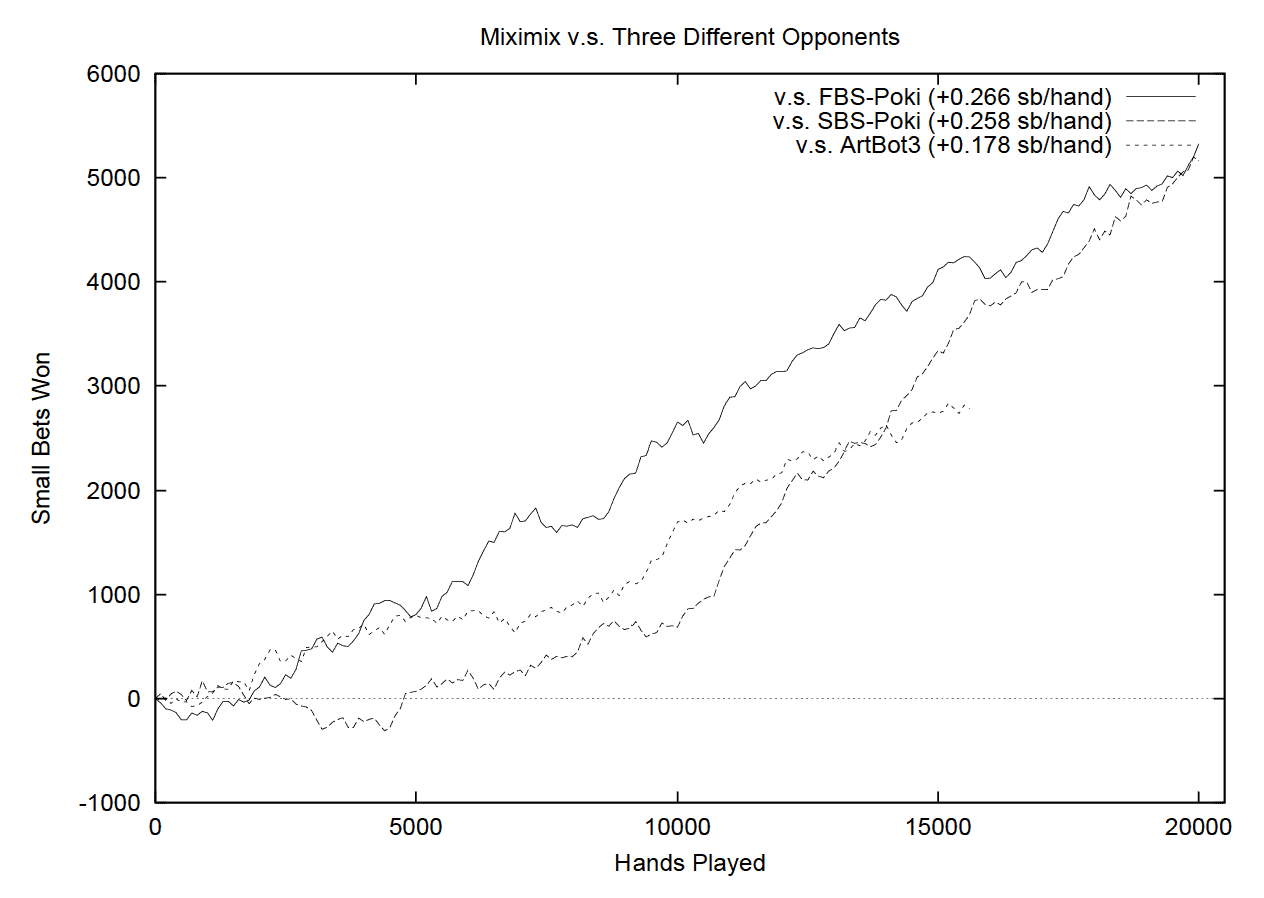

Davidson compared a Miximax-based betting approach against three other programs: FBS-Poki, SBS-Poki, and ArtBot. ArtBot, as it turns out, is a very loose, almost passive player. Imagine playing against someone who is difficult to read because they are defensive and unpredictable. Winning against ArtBot means winning small pots, not the massive victories you might hope for. ArtBot beats FBS-Poki, who is a bit of a pushover, by roughly +0.35 small bets per hand.

It’s like having a variety of sparring partners to test your moves against, each with their own unique style. Davidson’s Miximax player was put to the test here, starting from scratch and occasionally using a pre-built strategy from prior games. This Miximax isn’t your typical minimax player; it’s a Miximix, a slightly modified version that doesn’t always go for the highest-EV move. Think of it as adding a little randomness to avoid becoming too predictable, a necessary quirk that keeps every option in play.
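
To make the "doesn't always go for the highest-EV move" idea concrete, here is a minimal sketch. I don't remember how the thesis actually weights its choices, so the softmax weighting, the `temperature` parameter, the function names, and the EV numbers are all my own assumptions for illustration, not Davidson's method.

```python
import math
import random


def greedy_choice(evs):
    """Pure max-EV choice: always pick the action with the highest estimate."""
    return max(evs, key=evs.get)


def mixed_choice(evs, temperature=0.5, rng=random):
    """Sample an action, favouring higher EVs without always taking the best.

    Assumption: softmax weighting is illustrative, not taken from the thesis.
    """
    actions = list(evs)
    best = max(evs.values())
    # Subtract the best EV before exponentiating for numerical stability.
    weights = [math.exp((evs[a] - best) / temperature) for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


# Hypothetical EV estimates (in small bets) for a single decision.
example_evs = {"fold": 0.0, "call": 0.3, "raise": 0.5}
print(greedy_choice(example_evs))  # always the top-EV action
print(mixed_choice(example_evs))   # usually the top-EV action, sometimes not
```

A lower `temperature` makes the mixed player behave more like the greedy one; a higher value spreads its choices more evenly and makes it harder to read.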

So, what are the results? Miximax suffers a little during the first few thousand hands, much like a new student navigating their first few games. However, once it understands the opponent’s playing style, it begins to accumulate chips. Against FBS-Poki and SBS-Poki, it averages +0.4 to +0.5 small bets per hand. Against ArtBot it struggles more, with results ranging from +0.1 to +0.2 small bets per hand. ArtBot’s fast-changing style appears to expose flaws in Miximax’s context trees.
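
If the "small bets per hand" unit is unfamiliar, here is a rough sketch of the arithmetic, plus a running version that shows how the rate evolves as hands accumulate. The function names, stakes, and dollar amounts are hypothetical; they are not figures from the thesis.

```python
def small_bets_per_hand(net_winnings, small_bet, hands):
    """Win rate normalised by stake size and number of hands played."""
    return net_winnings / small_bet / hands


def running_win_rate(per_hand_results, small_bet):
    """Cumulative win rate (small bets per hand) after each hand."""
    rates = []
    total = 0.0
    for hands_played, result in enumerate(per_hand_results, start=1):
        total += result
        rates.append(small_bets_per_hand(total, small_bet, hands_played))
    return rates


# Hypothetical session: a $10/$20 limit game (small bet = $10),
# finishing $4,500 ahead after 1,000 hands.
print(small_bets_per_hand(4500, 10, 1000))
```

With those made-up numbers the rate works out to 0.45 small bets per hand, which would sit inside the +0.4 to +0.5 range mentioned above.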

Here’s an interesting part of the thesis: Davidson adds that this is only scratching the surface. The AI needs to learn faster, either by improving the context trees or by borrowing ideas from previous opponent models. The biggest challenge is extending this to multiplayer games, where the game tree becomes extremely complex. Davidson implies that handling more players may be impractical unless some major pruning is done. This was hard to manage when desktop PCs ran at around 1 GHz and 4 GB of RAM was considered a lot. Today’s hardware makes it far less of a constraint.
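
To get a feel for why more players hurt so much, here is a toy count of the distinct action sequences in a single betting round. It is a deliberate simplification of my own (fold/call/raise only, a fixed raise cap, no cards, no later rounds, and folding allowed even when there is nothing to call), not the tree the thesis searches.

```python
from functools import lru_cache


def count_betting_sequences(n_players, max_raises=4):
    """Count distinct action sequences in one simplified betting round."""

    @lru_cache(maxsize=None)
    def count(active, to_act, raises):
        # The round ends when one player is left or nobody still has to act.
        if active == 1 or to_act == 0:
            return 1
        total = count(active - 1, to_act - 1, raises)  # fold
        total += count(active, to_act - 1, raises)     # check or call
        if raises < max_raises:
            # A raise forces every other active player to act again.
            total += count(active, active - 1, raises + 1)
        return total

    return count(n_players, n_players, 0)


for n in range(2, 7):
    print(n, count_betting_sequences(n))
```

Even in this stripped-down model the count grows quickly with each extra seat, and a real hand multiplies that across several betting rounds and all the possible cards.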

I recall this brilliant ending in which Davidson quotes Josh Billings: “Life consists not in holding good cards, but in playing well those you do hold”. It serves as a reminder that in poker, as in life, making the most of what you have frequently outweighs pure luck.

There’s this graph that displays how Miximax performs versus various opponents. It’s very clear that as the AI learns more about its opponents, it adjusts and improves its win rate. It’s unstable at first, but it settles down as it collects more data, much like how humans adapt via experience.

So, Davidson’s work demonstrates that developing a poker AI is more than just crunching numbers. It’s about navigating through a veil of uncertainty and making educated estimates based on trends and probabilities. If you enjoy poker and AI, or simply seeing how machines can play games, this is a must-read.